Pea counting 2.0 – How Big Data revolutionizes life (science)

By Gunnar Schulze

09/16/2015

Have you ever felt like your smartphone knows more about your habits than you do? Nowadays, whether we want to schedule a vacation efficiently, find the way to the nearest organic food store, chat with friends, or check the local weather forecast (uncertain as it may be), we intuitively use services (or apps) that rely on large amounts of data – “Big Data”, to use the popular buzzword. Google, Twitter, YouTube, Facebook and other social media are only the tip of the iceberg. Somewhat less well-known, yet important, applications can be found in scientific fields like astrophysics, geography, climate research and health care. No matter where we look, Big Data challenges the way we think about our universe, our planet and ourselves.

These advances have not stopped short of biology. It was 150 years ago that Augustinian monk and founding father of genetics Gregor Mendel counted and compared the peas he grew in his garden and, based on the occurrence of just a handful of simple traits (such as color and shape), worked out the first fundamental laws of genetic inheritance. Today, life scientists collect, store and analyse an ever-growing amount of data (billions of “peas”, if you like) generated by so-called high-throughput technologies. At the same time, the traits we look at have changed from colors and shapes to proteins, genes and smaller stretches of DNA sequence, down to single bases (the letters of our DNA alphabet) that help us decode nature’s messages. The first published sequence of a human genome in 2001 was a milestone, giving researchers a tidal wave of new information that was previously concealed in our DNA (actually there were two different genomes, since scientists love competition[1]).

But that was before the era of Big Data as we know it today. For comparison, only 14 years later, we are talking about tens of thousands of genomes from all domains of life, including other animals, plants and bacteria. What took sequencing pioneers a decade of work can now be accomplished in less than a week, and at much lower cost. And there is still room for improvement: taking current and past advances into account, a recent publication[2] predicts that the field of genomics might soon outgrow other data monsters like Google and astrophysics. So what do we gain from all this information? Do we actually understand things better than we did 150 years ago? Can we read the genome like a Twitter feed?

It is certainly not as easy as that. To make sense of all this data, the field of bioinformatics combines methods from computer science, very similar to those used by Google and other data giants, with specialized biological knowledge to extract relevant information and convert it into human-readable form. Computers do the heavy lifting of “counting peas” while data scientists and field experts view, evaluate and apply the results. In this way, Big Data can help detect patterns and reveal relationships that are not visible by eye. It may be the discovery of a single point mutation that causes a disease (although that is rare) or the question of how a single fertilized egg develops into the complex, multicellular being we call a human. Sometimes we can even make predictions about the future or perform experiments and simulations inside the computer (in silico) to test our hypotheses. It seems that, whatever the problem, we only need to come up with a good question and Big Data will know the answer. And yet it is important to remember that computers still need “supervision”. Especially in the large-scale DNA and RNA sequencing analyses carried out routinely today, some recent studies have raised concerns about data contamination that, if it goes undetected, can lead to oversights and improper conclusions about biological and medical results. The more we base our assumptions, predictions and decisions on large amounts of data, the more we need to be able to trust this data to avoid misinterpretation.

The good news is that Big Data can help even here, if we let it. At the Computational Biology Unit at the University of Bergen, we are naturally interested in processing large-scale genomic data, gaining new insights into fundamental biological mechanisms and providing other researchers with high-quality results for biological and medical applications.

In addition, we are interested in resolving some of the problems that result from data contamination. To that end, combining different types of data from independent sources can help us distinguish the information we want from the noise and errors we don’t want. A novel approach for doing this on a large scale is currently being developed and tested on a number of pre-existing genome datasets. It aims to provide an improved knowledge base for researchers who critically depend on these datasets. The era of Big Data and genome-scale bioinformatics provides diverse opportunities and challenges. Only if we carefully select what we feed our computers, and how, will we eventually gain a deeper understanding of ourselves and other organisms and move a step further down the road to a chatty, maybe even twittering, genome.

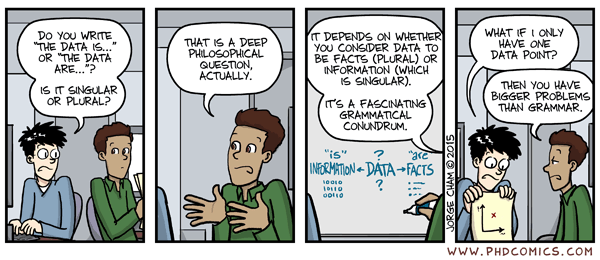

“Piled Higher and Deeper” by Jorge Cham [www.phdcomics.com]

References

[1] Shampo et al. Mayo Clinic Proceedings, 2011. J. Craig Venter – Human Genome Project. Link: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3068906/

[2] Stephens et al. PLoS Biology, 2015. Big Data: Astronomical or Genomical? Link: http://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.1002195